By Paul Scharre

Editor’s note: This is the first article in a six-part series, The Coming Swarm, on military robotics and automation as a part of the joint War on the Rocks-Center for a New American Security Beyond Offset Initiative.

1. BETWEEN A ROOMBA AND A TERMINATOR: WHAT IS AUTONOMY?


February 18, 2015

Photo credit: Eirik Newth

Department of Defense leaders have stated that robotics and autonomous systems will be a key part of a new “offset strategy” to sustain American military dominance, but what is autonomy? Uninhabited, or unmanned, systems have played important roles in Iraq and Afghanistan, from providing loitering overhead surveillance to defusing bombs. They have operated generally in a remote-controlled context, however, with only limited automation for functions like takeoff and landing. Numerous Defense Department roadmap and vision documents depict a future of uninhabited vehicles with greater autonomy, transitioning over time to true robotic systems. What that means for how militaries fight, however, is somewhat murky.

What does it mean for a robot to be “fully autonomous?” How much machine intelligence is required to reach “full autonomy,” and when can we expect it? And what is the role of the human warfighter in this proposed future with robots running loose, untethered from their human controllers?

Confusion about the term “autonomy” is a problem in envisioning the answers to these questions. The word “autonomy” is used by different people in different ways, making communicating about where we are headed with robotic systems particularly challenging. The term “autonomous robot” might mean a Roomba to one person and a Terminator to another! Writers or presenters on this topic often articulate “levels of autonomy,” but their levels rarely agree, leading a recent Defense Science Board report on autonomy to throw out the concept of “levels” of autonomy altogether.

In the interest of adding some clarity to this issue, I want to illuminate how we use the word, why it is confusing, and how we can be more precise. I can’t change the fact that “autonomy” means so many things to so many people, and I won’t try to shoehorn all of the possible uses of autonomy into yet another chart of “levels of autonomy.” But I can try to inject some much needed precision into the discussion.

What is “Autonomy?”

In its simplest form, autonomy is the ability of a machine to perform a task without human input. Thus an “autonomous system” is a machine, whether hardware or software, that, once activated, performs some task or function on its own. A robot is an uninhabited system that incorporates some degree of autonomy, generally understood to include the ability to sense the environment and react to it, at least in some crude fashion.

Autonomous systems are not limited to uninhabited vehicles, however. In fact, autonomous, or automated, functions are included on many human-inhabited systems today. Most cars today include anti-lock brakes, traction and stability control, power steering, emergency seat belt retractors, and air bags. Higher-end cars may include intelligent cruise control, automatic lane keeping, collision avoidance, and automatic parking. For military aircraft, automatic ground collision avoidance systems (auto-GCAS) can similarly take control of a human-piloted aircraft if a pilot becomes disoriented and is about to fly into terrain. And modern commercial airliners have a high degree of automation available throughout every phase of a flight. Increased automation or autonomy can have many advantages, including increased safety and reliability, improved reaction time and performance, reduced personnel burden with associated cost savings, and the ability to continue operations in communications-degraded or denied environments.

Parsing out how much autonomy a system has is important for understanding the challenges and opportunities associated with increasing autonomy. There is a wide gap, of course, between a Roomba and a Terminator. Rather than search in vain for a unified framework of “levels of autonomy,” a more fruitful direction is to think of autonomy as having three main axes, or dimensions, along which a system can vary. These dimensions are independent, and so autonomy does not exist on merely one spectrum, but three spectrums simultaneously.

The Three Dimensions of Autonomy

What makes understanding autonomy so difficult is that people tend to use the same word to refer to three completely different concepts:

The human-machine command-and-control relationship

The complexity of the machine

The type of decision being automated

These are all important features of autonomous systems, but they are different ideas.

The human-machine command-and-control relationship

Machines that perform a function for some period of time, then stop and wait for human input before continuing, are often referred to as “semiautonomous” or “human in the loop.” Machines that can perform a function entirely on their own but have a human in a monitoring role, with the ability to intervene if the machine fails or malfunctions, are often referred to as “human-supervised autonomous” or “human on the loop.” Machines that can perform a function entirely on their own, with humans unable to intervene, are often referred to as “fully autonomous” or “human out of the loop.” In this sense, “autonomy” is not about the intelligence of the machine, but rather its relationship to a human controller.

The complexity of the machine

The word “autonomy” is also used in a completely different way to refer to the complexity of the system. Regardless of the human-machine command-and-control relationship, words such as “automatic,” “automated,” and “autonomous” are often used to refer to a spectrum of complexity of machines. The term “automatic” is often used to refer to systems that have very simple, mechanical responses to environmental input. Examples of such systems include trip wires, mines, toasters, and old mechanical thermostats. The term “automated” is often used to refer to more complex, rule-based systems. Self-driving cars and modern programmable thermostats are examples of such systems. Sometimes the word “autonomous” is reserved for machines that execute some kind of self-direction, self-learning, or emergent behavior that was not directly predictable from an inspection of its code. An example would be a self-learning robot that taught itself how to walk or the Nest “learning thermostat.”

Others will reserve the word “autonomous” only for entities that have intelligence and free will, but these concepts hardly add clarity. Artificial intelligence is a loaded term that can refer to a wide range of systems, anywhere from those that exhibit near-human or super-human intelligence in a narrow domain, such as playing chess (Deep Blue), playing Jeopardy (Watson), or programming subway repair schedules, to potential future systems that might have human or super-human general intelligence. But whether general intelligence leads to free will, or whether humans even have free will, is itself debated.

What is particularly challenging is that there are no clear boundaries between these degrees of complexity, from “automatic” to “automated” to “autonomous” to “intelligent,” and different people may disagree on what to call any given system.

Type of function being automated

Ultimately, it is meaningless to refer to a machine as “autonomous” or “semiautonomous” without specifying the task or function being automated. Different decisions have different levels of complexity and risk. A mine and a toaster offer radically different levels of risk, even though both have humans “out of the loop” once activated and both use very simple mechanical switches. The task being automated, however, is much different. Any given machine might have humans in complete control of some tasks and might autonomously perform others. For example, an “autonomous car” drives from point A to point B on its own, but a person is still choosing the final destination. So it is only autonomous with respect to some functions.
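One way to make this concrete is to record autonomy per function rather than per machine. The Python sketch below is only an illustration: the class names, field names, and task strings are hypothetical, and the category labels are borrowed from the three dimensions described above.

```python
from dataclasses import dataclass
from enum import Enum


class Control(Enum):
    """Human-machine command-and-control relationship."""
    HUMAN_IN_THE_LOOP = "semiautonomous"
    HUMAN_ON_THE_LOOP = "human-supervised autonomous"
    HUMAN_OUT_OF_THE_LOOP = "fully autonomous"


class Complexity(Enum):
    """Rough spectrum of machine complexity; the boundaries are fuzzy."""
    AUTOMATIC = "automatic"
    AUTOMATED = "automated"
    AUTONOMOUS = "autonomous"


@dataclass(frozen=True)
class AutonomousFunction:
    """One task on one system, described along all three dimensions."""
    system: str
    task: str
    control: Control
    complexity: Complexity

    def describe(self) -> str:
        return (f"{self.system}: '{self.task}' is {self.control.value} "
                f"({self.complexity.value} machine)")


# Illustrative entries based on the mine and toaster comparison above.
mine = AutonomousFunction("mine", "detonate when triggered",
                          Control.HUMAN_OUT_OF_THE_LOOP, Complexity.AUTOMATIC)
toaster = AutonomousFunction("toaster", "toast bread",
                             Control.HUMAN_OUT_OF_THE_LOOP, Complexity.AUTOMATIC)

for function in (mine, toaster):
    print(function.describe())
```

The two entries match on control relationship and complexity and differ only in the task, which is where the difference in risk lies.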

“Full Autonomy” is a Meaningless Term

From this perspective, the question of when we will get to “full autonomy” is meaningless. There is not a single spectrum along which autonomy moves. The paradigm of human vs. machine is a common science fiction meme, but a better framework would be to ask which tasks are done by a person and which by a machine. A recent guidance document on autonomy from a number of NATO countries came to a similar conclusion, recommending a framework of thinking about “autonomous functions” of systems, rather than characterizing an entire vehicle or system as “autonomous.”

Importantly, these three dimensions of autonomy are independent. The intelligence or complexity of the machine is a separate concept from the tasks being performed. Increased intelligence or more sophisticated machine reasoning to perform a task does not necessarily equate to transferring control over more tasks from the human to the machine. Similarly, the human-machine command-and-control relationship is a different issue from complexity or tasks performed. A thermostat functions on its own without any human supervision or intervention when you leave your house, but it still has a limited set of functions it can perform.

Instead of thinking about “full autonomy,” we should focus on operationally-relevant autonomy: sufficient autonomy to get the job done. Which functions are required to achieve operationally-relevant autonomy depends on the mission, the environment, and communications, and could look very different in different scenarios. In the air domain, operationally-relevant autonomy might mean the ability for the aircraft to take off, land, and fly point-to-point on its own in response to human taskings, with a human overseeing operations and making mission-level decisions, but not physically piloting by stick and rudder. In that case, for highly automated aircraft like the Global Hawk or MQ-1C Gray Eagle, operationally-relevant autonomy is here today. In communications-denied environments, autonomy is sufficient today for an aircraft to perform surveillance missions, jamming, or striking pre-programmed fixed targets, although striking targets of opportunity would require a human in the loop. For ground vehicles, operationally-relevant autonomy might similarly mean the ability for the vehicle to drive itself in response to human taskings without a human operator physically driving the vehicle. Operationally-relevant autonomy for ground vehicles is here today for leader-follower convoy operations or human-supervised operations, but not quite yet for communications-denied navigation in cluttered environments with potential obstacles or people. In the undersea environment where communications are challenging but there are fewer obstacles, operationally-relevant autonomy is already here today, as uninhabited undersea vehicles can already perform missions without direct human supervision.

“Autonomy” is not some point we arrive at in the future. Autonomy is a characteristic that will be increasingly incorporated into different functions on military systems, much like increasingly autonomous functions on cars: automatic lane keeping, collision avoidance, self-parking, etc. As this occurs, humans will still be required for many military tasks, particularly those involving the use of force. No system will be “fully autonomous” in the sense of being able to perform all possible military tasks on its own. Even a system operating in a communications-denied environment will still be bounded in terms of what it is allowed to do. Humans will still set the parameters for operation and will deploy military systems, choosing the mission they are to perform.

So the next time someone tells you, “it’s autonomous,” ask for a little more precision on what, exactly, they mean.

One Response

February 21, 2015 at 5:59 pm

Paul…another great and insightful article. If I may, I’d like to proffer another dimension of autonomy – the “effect”. If the autonomy of a Roomba fails, the most likely effect is probably an unclean room, and the most unlikely yet worst case is that some priceless heirloom is destroyed. If the autonomy of a toaster fails, the most likely effect is burnt toast, and the unlikely worst case is a fire that destroys the entire home. We clearly accept the risk of those effects every day. A kinetic system, the purpose of which is to destroy or kill like the Terminator, has a more apparent, direct likely effect on human life, which causes people to question whether such systems should exist. I think the interesting part is when that toaster does fail, and that unlikely worst case of a fire kills someone innocent who was sleeping through breakfast. Take this to a cyber event using a virus designed to move through a computer system. The primary intent may be to infect some aspects of a system, but there are plenty of plausible scenarios where this may result in the loss of human lives (power goes out, and critical medicines can no longer be refrigerated, for one). The intended effect of that autonomous virus may not be human death, but the possible effects include this. I guess the point to my rambling is that autonomy is often limited by the intended or likely effects, when the spectrum of effects should be considered.

Bob Wagner

2. ROBOTS AT WAR AND THE QUALITY OF QUANTITY

February 26, 2015

Photo credit: asuscreative

The U.S. Department of Defense has launched the search for a “third offset strategy,” an approach to sustain U.S. military technological superiority against potential adversaries. But, for a number of reasons, this strategy is different from the previous two. Even the name “offset” may not be valid. The first two strategies were aimed at “offsetting” the Soviet numerical advantage in conventional weapons in Europe, first with U.S. nuclear weapons and later with information-enabled precision-strike weapons. But this time around, it may be the United States bringing numbers to the fight.

Uninhabited and autonomous systems have the potential to reverse the multi-decade trend in rising platform costs and shrinking quantities, allowing the U.S. military to field large numbers of assets at affordable cost. The result could be that instead of “offsetting” a quantitative advantage that an adversary is presumed to start with, the United States could be showing up with better technology and greater numbers.

The value of mass

The United States out-produced its enemies in World War II. By 1944, the United States and its Allies were producing over 51,000 tanks a year to Germany’s 17,800 and over 167,000 planes a year to the combined Axis total of just under 68,000. Even though many of Germany’s tanks and aircraft were of superior quality to those of the Allies, they were unable to compensate for the unstoppable onslaught of Allied iron. Paul Kennedy writes in The Rise and Fall of the Great Powers:

…by 1943-1944 the United States alone was producing one ship a day and one aircraft every five minutes! … No matter how cleverly the Wehrmacht mounted its tactical counterattacks on both the western and eastern fronts until almost the last months of the war, it was to be ultimately overwhelmed by the sheer mass of Allied firepower.

The Cold War saw a shift in strategy, with the United States instead initially relying on nuclear weapons to counter the growing Soviet conventional arsenal in Europe, the first “offset strategy.” By the 1970s, the Soviets had achieved a three-to-one overmatch against NATO in conventional forces and a rough parity in strategic nuclear forces. In response to this challenge, the U.S. military adopted the second offset strategy to counter Soviet numerical advantages with qualitatively superior U.S. weapons: stealth, advanced sensors, command and control networks, and precision-guided weapons.

The full effect of these weapons was seen in 1991, when the United States took on Saddam Hussein’s Soviet-equipped army. Casualty ratios in the Gulf War ran an extremely lopsided 30-to-1. Iraqi forces were so helpless against American precision airpower that the White House eventually terminated the war earlier than planned because media images of the so-called “highway of death” made American forces seem as if they were “cruelly and unusually punishing our already whipped foes,” in the words of Gulf War air commander General Chuck Horner. Precision-guided weapons, coupled with sensors to find targets and networks to connect sensors and shooters, allowed the information-enabled U.S. military to crush Iraqi forces fighting with unguided munitions.

What happens when they have precision-guided weapons too?

The proliferation of precision-guided weapons to other adversaries is shifting the scales, however, bringing mass once again back into the equation. The United States military can expect to face threats from adversary precision-guided munitions in future fights. At the same time, ever-rising platform costs are pushing U.S. quantities lower and lower, presenting adversaries with fewer targets on which to concentrate their missiles. U.S. platforms may be qualitatively superior, but they are not invulnerable. Salvos of enemy missiles threaten to overwhelm the defenses of U.S. ships and air bases. Even if missile defenses can, in principle, intercept incoming missiles, the cost-exchange ratio of attacking missiles to defending interceptors favors the attacker, meaning U.S. adversaries need only purchase more missiles to saturate U.S. defenses.

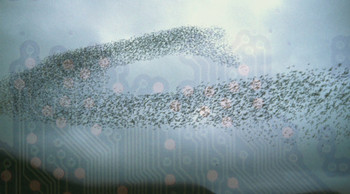
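The cost-exchange argument can be sketched as simple arithmetic. The Python function below is a rough illustration, not a model of any real system: the function name, the assumption that every engaged missile is stopped, and all of the input numbers (given in arbitrary cost units) are hypothetical.

```python
def saturation_estimate(interceptors, interceptors_per_missile,
                        missile_cost, interceptor_cost):
    """Estimate what it takes to exhaust a defender's interceptor magazine.

    Assumes every engaged missile is stopped; costs are in arbitrary units.
    """
    missiles_stopped = interceptors // interceptors_per_missile
    missiles_to_saturate = missiles_stopped + 1  # the next missile leaks through
    attacker_cost = missiles_to_saturate * missile_cost
    defender_cost = missiles_stopped * interceptors_per_missile * interceptor_cost
    # Defender spending per unit of attacker spending on each engaged missile.
    cost_exchange_ratio = (interceptors_per_missile * interceptor_cost) / missile_cost
    return missiles_to_saturate, attacker_cost, defender_cost, cost_exchange_ratio


# Hypothetical inputs: 100 interceptors, 2 fired per incoming missile,
# missiles costing 1 unit each and interceptors costing 3 units each.
missiles, attacker_cost, defender_cost, ratio = saturation_estimate(100, 2, 1.0, 3.0)
print(missiles, attacker_cost, defender_cost, ratio)
```

With these made-up inputs the attacker needs 51 missiles costing 51 units to get past a defense that spends 300 units, a ratio of 6 to 1 in the attacker's favor. The point is the shape of the relationship, not the specific values.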

Enter the swarm

Uninhabited systems offer an alternative model, with the potential to disaggregate expensive multi-mission systems into a larger number of smaller, lower cost distributed platforms. Because they can take greater risk and therefore be made low-cost and attritable – that is, built with the expectation that some will be lost – uninhabited systems can be built in large numbers. Combined with mission-level autonomy and multi-vehicle control, large numbers of low-cost attritable robotics can be controlled en masse by a relatively small number of human controllers.

Large numbers of uninhabited vehicles have several potential advantages:

Combat power can be dispersed, giving the enemy more targets and forcing the adversary to expend more munitions.

Platform survivability is replaced with a concept of swarm resiliency. Individual platforms need not be survivable if there are sufficient numbers of them such that the whole is resilient against attack.

Mass allows the graceful degradation of combat power as individual platforms are attrited, as opposed to a sharp loss in combat power if a single, more exquisite platform is lost.

Offensive salvos can saturate enemy defenses. Most defenses can only handle so many threats at one time. Missile batteries can be exhausted. Guns can only shoot in one direction at a time. Even low cost-per-shot continuous or near-continuous fire weapons like high energy lasers can only engage one target at a time and generally require several seconds of engagement to defeat a target. Salvos of guided munitions or uninhabited vehicles can overwhelm enemy defenses such that “leakers” get through, taking out the target.
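The saturation point in the last item above can be sketched with a toy calculation. This is a deliberately simplified illustration under stated assumptions: the function and parameter names are hypothetical, every engagement is assumed to succeed, and the numbers are invented for the example.

```python
def leakers(salvo_size, channels, seconds_per_engagement, window_seconds,
            magazine=None):
    """Count threats that get past a defense, assuming every engagement succeeds.

    channels: targets the defense can engage at the same time
    seconds_per_engagement: time needed to defeat one target
    window_seconds: time between detection and impact
    magazine: shots available, or None for a weapon with no practical shot limit
    """
    engagements_per_channel = window_seconds // seconds_per_engagement
    capacity = channels * engagements_per_channel
    if magazine is not None:
        capacity = min(capacity, magazine)  # a battery can be exhausted
    return max(0, salvo_size - capacity)


# A laser-like weapon: one target at a time, several seconds per target.
laser_leakers = leakers(10, channels=1, seconds_per_engagement=5,
                        window_seconds=30)

# A missile battery: more simultaneous engagements but a finite magazine.
battery_leakers = leakers(10, channels=4, seconds_per_engagement=10,
                          window_seconds=30, magazine=8)

print(laser_leakers, battery_leakers)
```

In this sketch a salvo of ten produces four leakers against the laser-like weapon, which is limited by time, and two against the battery, which is limited by its magazine. A salvo of five or fewer produces none against either.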

These advantages could translate to new, innovative approaches for using uninhabited systems, just a few of which are explored below.

The miniature air-launched decoy (MALD) and miniature air-launched decoy – jammer (MALD-J) – loitering air vehicles that are not quite munitions and are not aircraft – hint at the potential of small, loitering uninhabited air vehicles and air-mobile robots. The MALD functions as an aerial decoy to deceive enemy radars, while the MALD-J jams enemy radars. Similar future uninhabited air vehicles, launched from aircraft, ships or submarines, could saturate enemy territory with overwhelming numbers of low-cost, expendable systems. Like D-Day’s “little groups of paratroopers” dropped behind enemy lines, they could sow confusion and wreak havoc on an enemy.

Loitering electronic attack weapons could create an electronic storm of jamming, decoys and high-powered microwaves. Small air vehicles could autonomously fly down roads searching for mobile missiles and, once found, relay their coordinates back to human controllers for attack.

Such aircraft would be small and would require a means of getting to the fight. This could include submarines parked off an enemy’s coast, uninhabited missile boats that race to the enemy’s coastline before launching their payloads into the air, large bomber or cargo aircraft, or even uninhabited undersea pods like DARPA’s Hydra program.

A similar approach could help the Army expand combat power on land. The Army has thousands of fully functional ground vehicles such as HMMWVs and M113 armored personnel carriers that will not be used in future conflicts because they lack sufficient armor to protect human occupants. At very low cost, however, on the order of tens of thousands of dollars apiece, these vehicles could be converted into robotic systems. With no human on board, their lack of heavy armor would not be a problem.

This could be done at low cost using robotic appliqué kits – sensors and command systems that are applied to existing vehicles to convert them for remote or autonomous operation. Robotic appliqué kits have already been used to convert construction vehicles into remotely operated Bobcats and bulldozers to counter improvised explosive devices.

Applied to existing vehicles, robotic appliqué kits could give the Army a massive robot ground force at extremely low cost. The sheer mass of such a force, and the ability to apply it in sacrificial or suicidal missions, could change how the Army approaches maneuver warfare.

Uninhabited ground vehicles could be the vanguard of an advance, allowing robots to be the “contact” part of a “movement to contact.” Robotic vehicles could be used to flush out the enemy, flank or surround them, or launch feinting maneuvers. Uninhabited vehicles could be air-dropped behind enemy lines on suicide missions. Scouting for targets, they could be used by human controllers for direct engagements or could send back coordinates for indirect fire or aerial attacks.

These are just some of the possibilities that greater mass could bring in terms of imposing costs on adversaries and unlocking new concepts of operation. Experimentation is needed, both in simulations and in realistic real-world environments, to better understand how warfighters would employ large numbers of low-cost expendable robotic systems.

And there would be other issues to work out. Robotic systems would still require maintenance, although mitigation measures could minimize the burden. Modular design would allow easy replacements when parts broke, allowing maintainers to cannibalize other systems for spare parts. And uninhabited systems could be kept “in a box” during peacetime with only a limited number used for training, much like missiles. For some applications where uninhabited systems would be needed in wartime but not in peacetime, mixed-component units that leverage National Guard and Reserve maintainers may be a cost-effective way to manage personnel.

A new paradigm for assessing qualitative advantage

The point of building large numbers of lower cost systems is not to field forces on the battlefield that are qualitatively inferior to the enemy. Rather, it is to change the notion of qualitative superiority from an attribute of the platform to an attribute of the swarm. The swarm, as a whole, should be more capable than an adversary’s military forces. That is, after all, the purpose of combat: to defeat the enemy. What uninhabited systems enable is a disaggregation of that combat capability into larger numbers of less exquisite systems which, individually, may be less capable but in aggregate are superior to the enemy’s forces.

Disaggregating combat power will not be possible in all cases, and large (and expensive) vehicles will still be needed for many purposes. Expensive, exquisite systems will inevitably be purchased in small numbers, however, and so where possible they should be supplemented by larger numbers of lower-cost systems in a high-low mix. Neither a cheap-and-numerous nor an expensive-and-few approach will work in every instance, and U.S. forces will need to field a mix of high and low-cost assets to bring the right capabilities to bear – and in the right numbers – in future conflicts.

3. UNLEASH THE SWARM: THE FUTURE OF WARFARE

March 4, 2015

Image: Adapted from D. Dibenski (public domain) and Reilly Butler (CC)

Could swarms of low-cost expendable systems change how militaries fight? Last November, Under Secretary of Defense Frank Kendall asked the Defense Science Board to examine a radical idea: “the use of large numbers of simple, low cost (i.e. ‘disposable’) objects vs. small numbers of complex (multi-functional) objects.” This concept is starkly at odds with decades-long trends in defense acquisitions toward smaller numbers of ever-more expensive, exquisite assets. As costs have risen, the number of fighting platforms in the U.S. inventory has steadily declined, even in spite of budget growth. For example, from 2001 to 2008, the U.S. Navy and Air Force base (non-war) budgets grew by 22% and 27%, respectively, adjusted for inflation. Yet the number of ships in the U.S. military inventory decreased by 10% and the number of aircraft by 20%. The result is ever-diminishing numbers of assets, placing even more demands on the few platforms remaining, a vicious spiral that raises costs even further and pushes numbers even lower. Over thirty years ago, Norm Augustine warned that:

In the year 2054, the entire defense budget will purchase just one tactical aircraft. This aircraft will have to be shared by the Air Force and Navy 3½ days each per week except for leap year, when it will be made available to the Marines for the extra day.

But we need not wait until 2054 when the U.S. military only has one combat aircraft for “Augustine’s Law” to be a problem. It is here today.
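The 2001 to 2008 figures cited above imply a rough change in budget per platform. The short Python calculation below is only a back-of-the-envelope sketch: the function name is hypothetical, and pairing the Navy budget with the ship count and the Air Force budget with the aircraft count is a simplifying assumption, since the inventory figures cover the whole U.S. military.

```python
def budget_per_platform_change(budget_growth, inventory_change):
    """Fractional change in budget per platform.

    Both arguments are fractional changes, e.g. 0.22 for +22% and -0.10 for -10%.
    """
    return (1 + budget_growth) / (1 + inventory_change) - 1


# Assumed pairings, using the percentages quoted in the text.
ships = budget_per_platform_change(0.22, -0.10)
aircraft = budget_per_platform_change(0.27, -0.20)

print(f"ships: {ships:.3f}, aircraft: {aircraft:.3f}")
```

Under those assumptions, budget per ship rose by roughly 36% and budget per aircraft by roughly 59% in real terms over the period.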

Kendall is joined by a growing number of voices calling for a paradigm shift: from the few and exquisite to the numerous and cheap. T.X. Hammes wrote for WOTR in July of last year that the future of warfare was the “small, many, and smart” over the few and exquisite. And none other than the current Deputy Secretary of Defense Bob Work wrote back in January of 2014:

Moreover, miniaturization of robotic systems would enable the rapid deployment of massive numbers of platforms – saturating an adversary’s defenses and enabling the use of swarming concepts of operation that have powerful potential to upend more linear approaches to war-fighting.

Overwhelming an adversary through mass has major advantages, but a deluge is not a swarm. The power of swarming goes beyond overwhelming an adversary through sheer numbers. In nature, swarming behavior allows even relatively unintelligent animals like ants and bees to exhibit complex collective behavior, or “swarm intelligence.” Similarly, autonomous cooperative behavior among distributed robotic systems will enable not only greater mass on the battlefield, but also greater coordination, intelligence, and speed.

What is a swarm?

A swarm consists of disparate elements that coordinate and adapt their movements in order to give rise to an emergent, coherent whole. A wolf pack is something quite different from a group of wolves. Ant colonies can build structures and wage wars, but a large number of uncoordinated ants can accomplish neither. Harnessing the full potential of the robotics revolution will require building robotic systems that are able to coordinate their behaviors, both with each other and with human controllers, in order to give rise to coordinated fire and maneuver on the battlefield.

Swarming in nature can lead to complex phenomena

Swarms in nature are wholly emergent entities that arise from simple rules. Bees, ants, and termites are not individually intelligent, yet their colonies can exhibit extraordinarily complex behavior. Collectively, they are able to efficiently and effectively search for food and determine the optimal routes for bringing it back to their nests. Bees can “vote” on new nesting sites, collectively deciding the optimal locations. Ants can kill and move very large prey by cooperating. Termites can build massive structures, and ants can build bridges or float-like structures over water using their own bodies.

These collective behaviors emerge because of simple rules at the individual level that lead to complex aggregate behavior. A colony of ants will, over time, converge on an optimal route back from a food source because each individual ant leaves a trail of pheromones behind it as it heads back to the nest. More ants will arrive back at the nest sooner via the faster route, leading to a stronger pheromone trail, which will then cause more ants to use that trail. No individual ant “knows” which trail is fastest, but collectively the colony nonetheless converges on the optimal route.
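The feedback loop described here can be sketched in a few lines of Python. This is a minimal deterministic toy model, not a faithful simulation of ant behavior: the function name, the evaporation rate, the route lengths, and the rule that ants split between routes in proportion to pheromone are all assumptions made for illustration.

```python
def simulate_trails(length_short=1.0, length_long=2.0, steps=200,
                    evaporation=0.1):
    """Track the share of ants using the short route over time.

    Each step, ants split between the two routes in proportion to pheromone.
    A route's deposit is its share of ants divided by its length, because
    ants on the shorter route complete more round trips per unit of time.
    """
    lengths = {"short": length_short, "long": length_long}
    pheromone = {"short": 1.0, "long": 1.0}  # no initial preference
    history = []
    for _ in range(steps):
        total = sum(pheromone.values())
        share = {route: pheromone[route] / total for route in pheromone}
        history.append(share["short"])
        for route in pheromone:
            deposit = share[route] / lengths[route]
            pheromone[route] = (1 - evaporation) * pheromone[route] + deposit
    return history


history = simulate_trails()
print(f"start: {history[0]:.2f}, end: {history[-1]:.4f}")
```

The colony starts with an even split and drifts almost entirely onto the short route, even though no rule in the model compares the two routes directly.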

Robot swarms differ from animal swarms in important ways

As with ants, termites, and bees, simple rules governing the behavior of robots can lead to aggregate swarming behavior for cooperative scouting, foraging, flocking, construction, and other tasks. Robot swarms can differ from those found in nature in several interesting and significant ways. Robot swarms can leverage a mix of direct and implicit communication methods, including sending complex signals over long distances. Robot swarms may consist of heterogeneous agents – a mix of different types of robots working together to perform a task. For example, the “swarmanoid” is a heterogeneous swarm of “eye-bots, hand-bots, and foot-bots” that work together to solve problems.

The most important difference between animal and robot swarms is that robot swarms are designed while swarm behavior in nature has evolved. Swarms in nature have no central controller or “common operating picture.” Robot swarms, on the other hand, ultimately operate at the direction of a human being to perform a specific task.

Concepts for military swarming are largely unexplored

Increasingly autonomous robotic systems allow the potential for swarming behavior, with one person controlling a large number of cooperative robotic systems. Just last year, for example, the Office of Naval Research demonstrated a swarm of small boats on the James River, conducting a mock escort of a high-value ship during a strait transit. Meanwhile, researchers at the Naval Postgraduate School are investigating the potential for swarm vs. swarm warfare, building up to a 50-on-50 swarm aerial dogfight.

These developments raise important questions: How does one fight with a swarm? How does one control it? What are its weaknesses and vulnerabilities? Researchers are just beginning to understand the answers to these questions. At a higher level, though, a look at the historical evolution of conflict can help shed light on how we should think about the role that swarming plays in warfare.

From melee to mass to maneuver to swarm

In 2000, John Arquilla and David Ronfeldt released a groundbreaking monograph, Swarming and the Future of Conflict. It articulates an evolution of four doctrinal forms of conflict across history: melee, mass, maneuver, and swarm.

Ronfeldt and Arquilla proposed that, over time as military organizations incorporated greater communications, training, and organization, they were able to fight in an increasingly sophisticated manner, leveraging more advanced doctrinal forms, with each evolution superior to the previous. Today, they argued, militaries predominantly conduct maneuver warfare. But swarming would be the next evolution.

From melee to mass

In ancient times, warriors fought in melee combat, fighting as uncoordinated individuals (think: Braveheart). The first innovation in doctrine was the invention of massed formations like the Greek phalanx that allowed large numbers of individuals to fight in organized ranks and files as a coherent whole, supporting one another.

Massed formations have the advantage of synchronizing the actions of combatants, and were a superior innovation in combat. But massing requires greater organization and training, as well as the ability for individuals to communicate with one another in order to act collectively.

Melee vs Mass

In melee fighting, combatants fight as uncoordinated individuals. Massed formations have the advantage of synchronizing the actions of combatants, allowing them to support one another in combat. Massing requires greater organization, however, as well as the ability for individuals to communicate with one another in order to act as a whole.

From mass to maneuver

The next evolution in combat was maneuver warfare, which combined the benefits of massed elements with the ability for multiple massed elements to maneuver across long distances and mutually support each other. This was a superior innovation to mass on its own because it allowed separate formations to move as independent elements to outflank the enemy and force the enemy into a disadvantageous fighting position. Maneuver warfare requires greater mobility than massing, however, as well as the ability to communicate effectively between separated fighting elements.

Mass vs Maneuver

Maneuver warfare combines the advantages of mass with increased mobility. In maneuver warfare, mutually supporting separate massed formations move as independent elements to outflank the enemy and force the enemy into a disadvantageous fighting position. Maneuver warfare requires greater mobility than massing as well as the ability to communicate effectively between separated fighting elements.

From maneuver to swarm

Arquilla and Ronfeldt’s hypothesis was that maneuver was not the culmination of combat doctrine, but rather another stage of evolution that would be superseded by swarming. In swarming, large numbers of dispersed individuals or small groups coordinate their actions to fight as a coherent whole.

Swarm warfare, therefore, combines the highly decentralized nature of melee combat with the mobility of maneuver and a high degree of organization and cohesion, allowing a large number of individual elements to fight collectively. Swarming has much higher organization and communication requirements than maneuver warfare, since the number of simultaneously maneuvering and fighting individual elements is significantly larger.

Maneuver vs Swarm

Swarm warfare combines the highly decentralized nature of melee combat with the mobility of maneuver and a high degree of organization and cohesion, allowing a large number of individual elements to fight collectively. Swarming has much higher organization and communication requirements than maneuver warfare, since the number of simultaneously maneuvering and fighting individual elements is significantly larger.

Challenges to fighting as a swarm

These four types of warfare – melee, mass, maneuver, and swarm – require increasingly sophisticated levels of command-and-control structures and social and information organization. Examples of all four forms, including swarming, can be found dating to antiquity, but widespread use of higher forms of warfare did not occur until social and information innovations, such as written orders, signal flags, or radio communication, enabled coherent massing and maneuver.

Swarming tactics date back to Genghis Khan, but have often played a less-than-central role in military conflict. Recent examples of swarming in conflict can be seen in extremely decentralized organizations like protest movements or riots. In 2011, London rioters were able to communicate, via London’s BlackBerry network, the location of police barricades. They were then able to rapidly disperse to avoid the barricades and re-coalesce in new areas to continue looting. The police struggled to contain the rioters, since the rioters actually had better real-time information than the police. Moreover, because the rioters were an entirely decentralized organization, they could respond more rapidly to shifting events on the ground. Rioters did not need to seek permission to change their behavior; individuals simply adjusted their actions based on new information they received.

This example points to some of the challenges in swarming. Effectively employing swarming requires a high degree of information flow among disparate elements; otherwise the fighting will rapidly devolve into melee combat. Individual elements must not only be connected with one another and able to pass information, but also able to process it quickly. Swarming also depends upon the ability to treat individual elements as relatively sacrificial, since isolated elements may be overwhelmed by larger, massed elements. Finally, and perhaps most challenging for military organizations, swarming depends on a willingness to devolve a significant amount of control over battlefield execution to the fighting elements closest to the battlefield’s edge. Thus, swarming is in many ways the ultimate in commander’s intent and decentralized execution. The resulting combat advantage is far greater speed of reaction to enemy movements and battlefield events in real time.

The Operational Advantages of Robot Swarms

The information-processing and communications requirements of swarming, as well as the requirement to treat individual elements as relatively sacrificial, make swarming a difficult tactic to employ with people. It is ideal, however, for robotic systems. In fact, as militaries deploy large numbers of low-cost robotic systems, controlling each system remotely, as is done today, would be cost-prohibitive in terms of personnel requirements. It would also slow down the pace of operations. Autonomous, cooperative behavior of multiple robotic systems operating under human command at the mission level will be necessary to control large numbers of robotic systems. Autonomous, cooperative behavior will also unlock many advantages on the battlefield in terms of greater coordination, intelligence and speed. A few examples are given below:

Coordinated attack and defense – Swarms could be used for coordinated attack, saturating enemy defenses with waves of attacks from multiple directions simultaneously, as well as coordinated defense. Swarms of small boats could defend surface vessels from enemy fast attack craft, shifting in response to perceived threats. Defensive counter-swarms of aerial drones could home in on and destroy incoming swarms of drones or boats.

Dynamic self-healing networks – Swarming behavior can allow robotic systems to act as dynamic self-healing networks. This can be used for a variety of purposes, such as maintaining surveillance coverage over an area, resilient self-healing communications networks, intelligent minefields or adaptive logistics lines.

Distributed sensing and attack – Swarms can perform distributed sensing and attack. Distributing assets over a wide area can allow them to function as an array with greater sensor fidelity. Conversely, they can also, in principle, conduct distributed electronic attack, synchronizing their electromagnetic signals to provide focused point jamming.

Deception – Cooperative swarms of robotic vehicles can be used for large-scale deception operations, performing feints or false maneuvers to deceive enemy forces. Coordinated emissions from dispersed elements can give the impression of a much larger vehicle or even an entire formation moving through an area.

Swarm intelligence – Robotic systems can harness “swarm intelligence” through distributed voting mechanisms, which could improve target identification and geolocation accuracy and provide increased resilience to spoofing.
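To make the voting idea a little more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any fielded system: the function names (swarm_vote, fuse_position), the quorum threshold, and the sensor reports are all hypothetical. It shows how a simple majority vote over classification reports, and a median over position estimates, can tolerate a minority of spoofed or faulty swarm members.

```python
from collections import Counter
from statistics import median


def swarm_vote(reports, quorum=0.5):
    """Return the label reported by more than `quorum` of members, else None.

    `reports` is a list of classification labels, one per swarm member.
    The quorum threshold is an assumed design parameter.
    """
    if not reports:
        return None
    label, count = Counter(reports).most_common(1)[0]
    return label if count / len(reports) > quorum else None


def fuse_position(estimates):
    """Fuse (x, y) position estimates with a per-axis median.

    The median ignores a minority of wildly wrong estimates, which a
    plain average would not.
    """
    xs = [x for x, _ in estimates]
    ys = [y for _, y in estimates]
    return (median(xs), median(ys))


# Hypothetical data: seven drones classify the same contact, and two of
# them have been spoofed into reporting a decoy.
reports = ["tank", "tank", "decoy", "tank", "tank", "decoy", "tank"]
decision = swarm_vote(reports)

# Hypothetical data: five position estimates, one badly corrupted.
estimates = [(10.1, 4.0), (9.9, 4.2), (10.0, 3.9), (10.2, 4.1), (55.0, -20.0)]
fused = fuse_position(estimates)

print(decision, fused)
```

In this toy case the two spoofed reports are outvoted and the corrupted position estimate has no effect on the fused result. A real swarm would also have to decide how votes are exchanged over a contested network and how many compromised members it is designed to survive, questions this sketch deliberately leaves aside.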

Swarming has tremendous potential on the battlefield for coordinated action, far beyond simply overwhelming an adversary with sheer numbers. However, paradigm shifts in warfare ultimately derive not just from a new technology, but from the combination of technology with new doctrine, organization, and concepts of operation. Concepts for swarming are largely unexplored, but researchers are beginning to conduct experiments to understand how to employ, control and fight with swarms. Because much of the technology behind robotic swarms will come from the commercial sector and will be widely available, there is not a moment to lose. The U.S. military should invest in an aggressive program of experimentation and iterative technology development, linking together developers and warfighters, to harness the power of swarms.

Paul Scharre

Paul Scharre is a Fellow and Director of the 20YY Warfare Initiative at the Center for a New American Security.

From 2008 to 2013, Mr. Scharre worked in the Office of the Secretary of Defense (OSD), where he played a leading role in establishing policies on unmanned and autonomous systems and emerging weapons technologies. Mr. Scharre led the DoD working group that drafted DoD Directive 3000.09, establishing the Department’s policies on autonomy in weapon systems. Mr. Scharre also led DoD efforts to establish policies on intelligence, surveillance, and reconnaissance (ISR) programs and directed energy technologies. Mr. Scharre was involved in the drafting of policy guidance in the 2012 Defense Strategic Guidance, 2010 Quadrennial Defense Review, and Secretary-level planning guidance. His most recent position was Special Assistant to the Under Secretary of Defense for Policy.

Prior to joining OSD, Mr. Scharre served as a special operations reconnaissance team leader in the Army’s 3rd Ranger Battalion and completed multiple tours to Iraq and Afghanistan. He is a graduate of the Army’s Airborne, Ranger, and Sniper Schools and Honor Graduate of the 75th Ranger Regiment’s Ranger Indoctrination Program.

Mr. Scharre has published articles in Proceedings, Armed Forces Journal, Joint Force Quarterly, Military Review, and in academic technical journals. He has presented at National Defense University and other defense-related conferences on defense institution building, ISR, autonomous and unmanned systems, hybrid warfare, and the Iraq war. Mr. Scharre holds an M.A. in Political Economy and Public Policy and a B.S. in Physics, cum laude, both from Washington University in St. Louis.
